The Slow And Boring Way That I Get Facebook Ads To Work Well

I ‘audit’ a lot of FB ads accounts, which means I get to see what is and isn’t working, and to spot patterns in the mistakes people commonly make.

One of the least sexy bits of advertising is testing - but it’s also pretty much the sure-fire road to consistent improvement.

One of my very first clients initially signed up with me just to ‘freshen up the ads’ - they ran their ads in-house, but fancied getting an expert opinion on how they could step them up a notch.

In the one month I had access to their account, I set up a bunch of tests. When I came back to the account later, this time on a long-term basis, I could see the results.

In one campaign, the single test I ran (using Lead Forms instead of a conversion ad) had brought in an extra £4,200 from a £25-per-day ad spend.

That was without long-term optimisation, and just with one test. It's worth doing.

Here are the 9 most common testing mistakes I’ve seen businesses make, followed by my tips on how you should be testing.

Most common mistakes made:

Not testing - just putting stuff out there and leaving it.

Clearly, if you never test anything, you’re never going to improve your results. This usually comes down to business owners simply not having the time, but even big clients are guilty of it, such as an influencer brand I worked with that was spending £200+ a day.

Not being systematic - throwing out loads of different stuff and then not knowing how to analyse it or what to do with the info.

This is really no better than not testing. A scattergun approach might get you a win or two, but if you don’t understand why or how it worked, you can’t replicate it. An e-commerce store owner had exactly this problem: he would get great results from one campaign in every 10, and never know which one it would be.

Sending the traffic somewhere useless anyway, e.g. optimising for a lower cost per link click, but then sending people to your homepage.

I’ve seen people optimise their ads endlessly but keep sending the traffic to a boring, unfocused landing page. I even audited a charity whose ads were being run by an agency doing exactly this: the agency was getting nice low numbers for its reports, and then blaming the lack of conversions on the charity’s follow-up team.

Not testing towards the final objective.

Conversion ads are really powerful, but people often stick with traffic ads, focusing on click-through rate (CTR) rather than opt-ins or sales.

Running tests at the ad level, not the ad set level

I don’t know why, but gyms seem very prone to this mistake. They whack a whole bunch of different ad images in at the ad level, but the way FB chooses a winner is flawed: you end up with one or two images getting all the impressions and the others seemingly ignored.

Using a saved audience, and sticking with it.

I audited an SEO company’s ads - they had a clear picture of their avatar, and created a saved audience of interests that were all business/marketing/SEO related. All their ads went to this same audience, and it meant that they had no idea which of those interests actually worked best.

Not testing a content-first funnel.

A lot of B2B clients were guilty of this, going straight for a consultation call rather than using content at the ‘Top Of Funnel’ to build a relationship first.

Short-term thinking - testing is the slow, boring route to guaranteed success

I had an e-commerce client for a good few months, but every time we started making progress with optimisation, he would want to throw a big sale out there or try a whole new product range, and that killed all the progress we had made. Don’t rely on discounts, and don’t expect to have everything ticking over perfectly after 2 weeks.

Testing stuff, but not turning off the worst performers.

This is weirdly common, and a driving instructor training business was very guilty of it: they would set up a bunch of ad sets, each testing a different variable, but then leave them all running for the entire campaign. That defeats the whole purpose.

Some tips I have

When you are starting from scratch, I recommend sticking to ‘best practice’ as much as you can and running an initial 2v2v2 creative test. This is 8 ad sets testing 2 variations each of headline, ad copy, and visual (2 × 2 × 2 = 8). Set them all up, then start killing off the worst performers one by one after an initial 48-hour period.

When you’ve done that, you have a winner and can carry on testing one variable at a time (there’s a list of what to test further down). For example, a 2v2v2 test could use:

- Headline 1 - Immediate benefit

- Headline 2 - Deeper benefit

- Copy 1 - Short - Who, What, Why, CTA

- Copy 2 - Long - Add testimonial.

- Visual 1 - Image of the product/service

- Visual 2 - Image of your target audience

Then combine them into 8 ad sets:

- Adset 1: H1C1V1

- Adset 2: H1C2V1

- Adset 3: H2C1V1

- Adset 4: H2C2V1

- Adset 5: H1C1V2

- Adset 6: H1C2V2

- Adset 7: H2C1V2

- Adset 8: H2C2V2
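If you’re setting these up by hand, it’s easy to miss or duplicate a combination. The 8 ad sets are just every headline × copy × visual combination, so here’s a minimal Python sketch that lists them. The variable names and the `build_test_grid` helper are hypothetical; it only produces labels for you to set up in Ads Manager and doesn’t talk to Facebook at all.

```python
from itertools import product

# Two variations of each element, as in the 2v2v2 example (descriptions are illustrative).
headlines = {"H1": "Immediate benefit", "H2": "Deeper benefit"}
copies = {"C1": "Short: who, what, why, CTA", "C2": "Long, with testimonial"}
visuals = {"V1": "Image of the product/service", "V2": "Image of your target audience"}


def build_test_grid(headlines, copies, visuals):
    """Return one (label, spec) pair per ad set: every headline x copy x visual combination.

    product() varies the last argument fastest, so visuals change slowest and copy
    changes fastest, which gives the same order as Adset 1 to Adset 8 in the list.
    """
    grid = []
    for v, h, c in product(visuals, headlines, copies):
        label = f"{h}{c}{v}"
        spec = {"headline": headlines[h], "copy": copies[c], "visual": visuals[v]}
        grid.append((label, spec))
    return grid


if __name__ == "__main__":
    for i, (label, spec) in enumerate(build_test_grid(headlines, copies, visuals), start=1):
        print(f"Adset {i}: {label}")
```

Adding a third headline is just one more dictionary entry, and the grid grows to 12 ad sets, which is a quick way to see how fast the budget requirement climbs as you add variations.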

Choose a winner from those 8, then work down the list below, testing one thing at a time.

Test the things that make the biggest impact first

The visuals:

- video VS image VS slideshow VS carousel

- image type

The headline:

- Question vs feature vs benefit

- Short vs long

- Positive vs negative

The offer.

The body copy:

- Long vs short

- With/without testimonial

- With/without scarcity

- With/without CTA

The targeting:

- Different Interests

- Different custom audiences

- Different lookalikes

A landing page VS a Lead Form

Different objectives (traffic vs conversion vs engagement).
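“One at a time” is the key bit: each new round keeps everything from your current winner and changes a single thing, so you know exactly what caused any difference. Here’s a rough Python sketch of that. The field names, the example options and the `next_round` helper are all hypothetical, and keeping the current winner running as a control in each round is an assumption, not something covered above.

```python
from typing import Dict, List

# Hypothetical winner from the first 2v2v2 round.
winner = {
    "visual": "image of target audience",
    "headline": "immediate benefit",
    "body_copy": "short",
    "targeting": "interest: small business owners",
}

# A few of the list items, in the same priority order (biggest impact first).
# The challenger options are illustrative only.
test_queue = [
    ("visual", ["video", "carousel"]),
    ("headline", ["question", "negative framing"]),
    ("body_copy", ["long with testimonial"]),
    ("targeting", ["lookalike audience"]),
]


def next_round(winner: Dict[str, str], dimension: str, challengers: List[str]) -> List[Dict[str, str]]:
    """Build one ad set spec per option, changing only `dimension`.

    The first spec is an unchanged copy of the winner (the control); the winner
    dict itself is never modified.
    """
    variants = [dict(winner)]
    for option in challengers:
        variant = dict(winner)
        variant[dimension] = option
        variants.append(variant)
    return variants


if __name__ == "__main__":
    dimension, challengers = test_queue[0]
    for ad_set in next_round(winner, dimension, challengers):
        print(ad_set["visual"], "|", ad_set["headline"])
```

Once a round has a winner, you’d swap it in as the new `winner` and move on to the next entry in `test_queue`.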

Always test at the ad set level.

Know which metrics to focus on.

- Your primary objective, i.e. cost per sale/lead/sign-up

- Then CTR and CPM, if your cost per lead is too high to collect enough results for good data.
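Ads Manager already calculates these for you, but in case it helps to see what each one actually measures, here are the standard definitions as a small Python sketch. The `AdSetStats` class and the example numbers are made up for illustration, and Meta reports more than one CTR variant (all clicks vs link clicks), so its figures may not match a hand calculation exactly.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AdSetStats:
    """Numbers for one ad set, e.g. copied out of Ads Manager (hypothetical class)."""
    name: str
    spend: float      # £ spent so far
    impressions: int
    clicks: int
    results: int      # sales / leads / sign-ups: whatever your primary objective is

    def cost_per_result(self) -> Optional[float]:
        # Primary metric. Undefined (None) until there's at least one result.
        return self.spend / self.results if self.results else None

    def ctr(self) -> float:
        # Click-through rate: clicks as a percentage of impressions.
        return 100 * self.clicks / self.impressions if self.impressions else 0.0

    def cpm(self) -> float:
        # Cost per 1,000 impressions.
        return 1000 * self.spend / self.impressions if self.impressions else 0.0


stats = AdSetStats("H1C1V1", spend=40.0, impressions=8000, clicks=120, results=4)
print(stats.cost_per_result(), stats.ctr(), stats.cpm())  # 10.0 1.5 5.0
```

If an ad set has no results yet, cost per result comes back as `None` rather than £0, and that’s exactly the situation where CTR and CPM are the only signal you have.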

Give the ads 48 hours before making ANY changes (unless you spot an error, or it’s massively clear that one variable is utterly shit).
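The “wait, then kill the worst one” routine is simple enough to write down as a rule. Here’s a minimal Python sketch, assuming you rank ad sets by cost per result (your primary objective) and switch off one at a time. The `worst_performer` helper and the data shape are hypothetical; `min_hours` defaults to 48 but can be dropped to 36 for a short campaign. The exceptions in brackets above (errors, or something obviously terrible) are judgement calls, so they’re left out.

```python
from typing import Dict, Optional


def worst_performer(ad_sets: Dict[str, Dict[str, float]], hours_running: float,
                    min_hours: float = 48) -> Optional[str]:
    """Pick the single ad set to switch off next, or None if it's too early to judge.

    `ad_sets` maps an ad set name to {"spend": ..., "results": ...}, e.g. numbers
    copied out of Ads Manager (this shape is hypothetical).
    """
    # Respect the waiting period, and never switch off the last ad set standing.
    if hours_running < min_hours or len(ad_sets) < 2:
        return None

    def cost_per_result(name: str) -> float:
        stats = ad_sets[name]
        # No results yet counts as infinitely expensive.
        return stats["spend"] / stats["results"] if stats["results"] else float("inf")

    # Highest cost per result loses; ties on cost go against whichever spent more.
    return max(ad_sets, key=lambda name: (cost_per_result(name), ad_sets[name]["spend"]))


stats = {
    "H1C1V1": {"spend": 40.0, "results": 4},  # £10 per result
    "H1C2V1": {"spend": 40.0, "results": 2},  # £20 per result
    "H2C1V1": {"spend": 38.0, "results": 0},  # nothing yet
}
print(worst_performer(stats, hours_running=24))  # None: still inside the 48 hours
print(worst_performer(stats, hours_running=48))  # H2C1V1
```

After you switch that one off, run the same check again on what’s left; that’s the “one by one” part.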

If you have a short timeframe, start with lots of variables at a high budget and remove them in quick succession. For example, if you have a £400 budget and only a week to run the ads, start with 8 ad sets, each set to £20 a day. This sounds stupid, because £20 a day × 8 ad sets × 7 days = £1,120. But what you do is wait for an initial 36 to 48 hours, then aggressively kill off the worst performer each time, leaving you with just the best one or two pretty early on.
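It’s worth sanity-checking a plan like this before you start. Here’s a minimal Python sketch that adds up the spend for a kill-off schedule. The phase lengths in `aggressive` are a hypothetical example, not a rule, and the sketch assumes every live ad set spends exactly its daily budget, which real delivery won’t always do day to day.

```python
from typing import List, Tuple

# Each phase is (days, number of ad sets still running during those days).
Schedule = List[Tuple[float, int]]


def total_spend(schedule: Schedule, daily_budget_per_ad_set: float) -> float:
    """Spend for a kill-off plan, assuming each live ad set spends its full daily budget."""
    return sum(days * active * daily_budget_per_ad_set for days, active in schedule)


def check_plan(schedule: Schedule, daily_budget_per_ad_set: float,
               total_budget: float, run_days: float) -> Tuple[float, bool]:
    """Return (planned spend, whether it fits the budget); phases must cover run_days."""
    if abs(sum(days for days, _ in schedule) - run_days) > 1e-9:
        raise ValueError("schedule phases must add up to the full run length")
    spend = total_spend(schedule, daily_budget_per_ad_set)
    return spend, spend <= total_budget


# Leaving all 8 ad sets on for the whole week: the "sounds stupid" figure.
never_kill = [(7, 8)]
# Hypothetical aggressive plan: 8 ad sets for 36 hours, then down to 4, then 2, then 1.
aggressive = [(1.5, 8), (0.5, 4), (1, 2), (4, 1)]

print(check_plan(never_kill, 20.0, 400.0, 7))  # (1120.0, False)
print(check_plan(aggressive, 20.0, 400.0, 7))  # (400.0, True)
```

Even the first 48 hours with all 8 ad sets live costs 2 × 8 × £20 = £320, which is why the kill-off has to be aggressive if you’re working to £400.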

Does that make sense? It's not exciting, but it does work.

Feel free to drop any questions in the comments below, or reach out at [email protected].

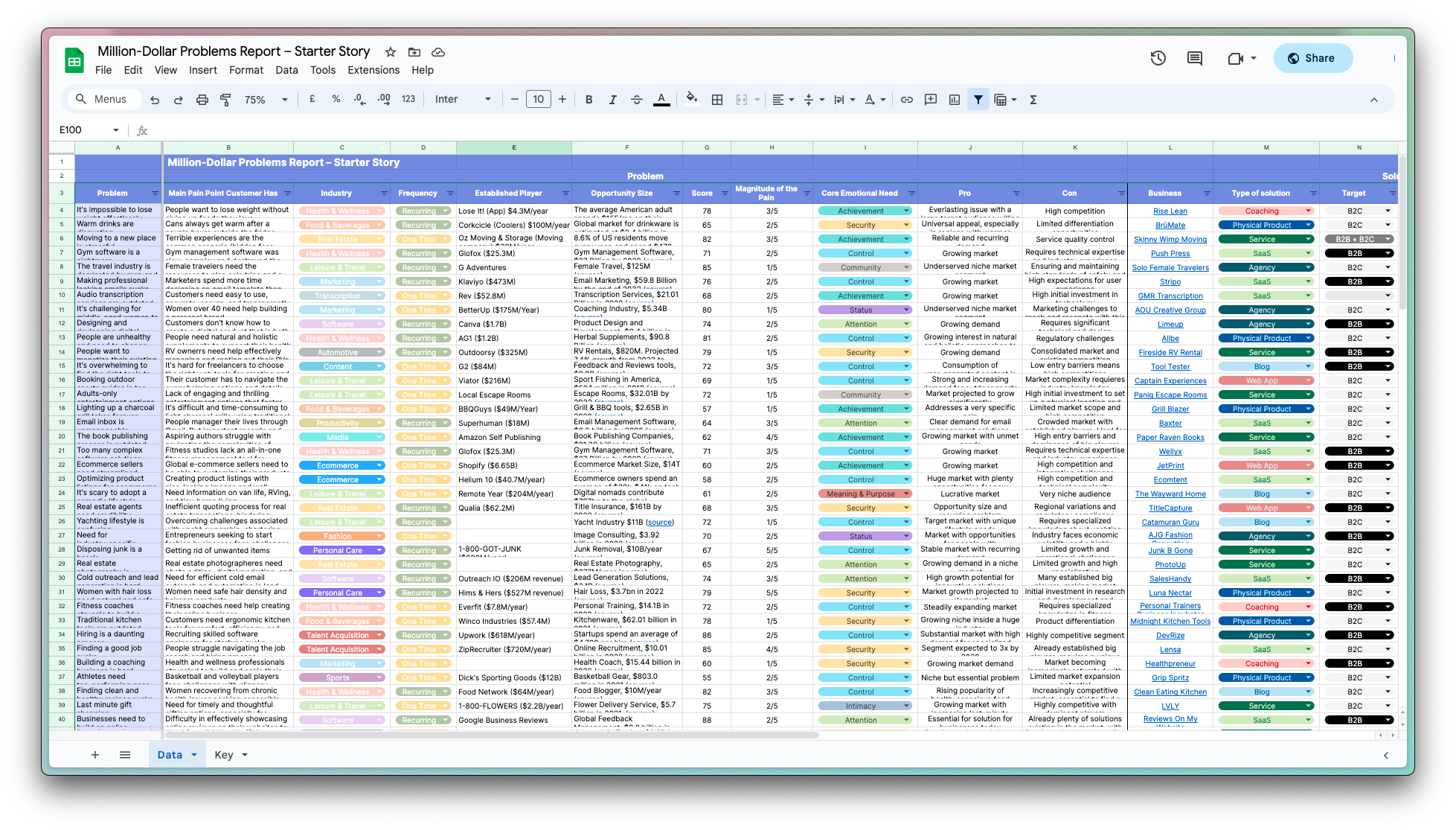

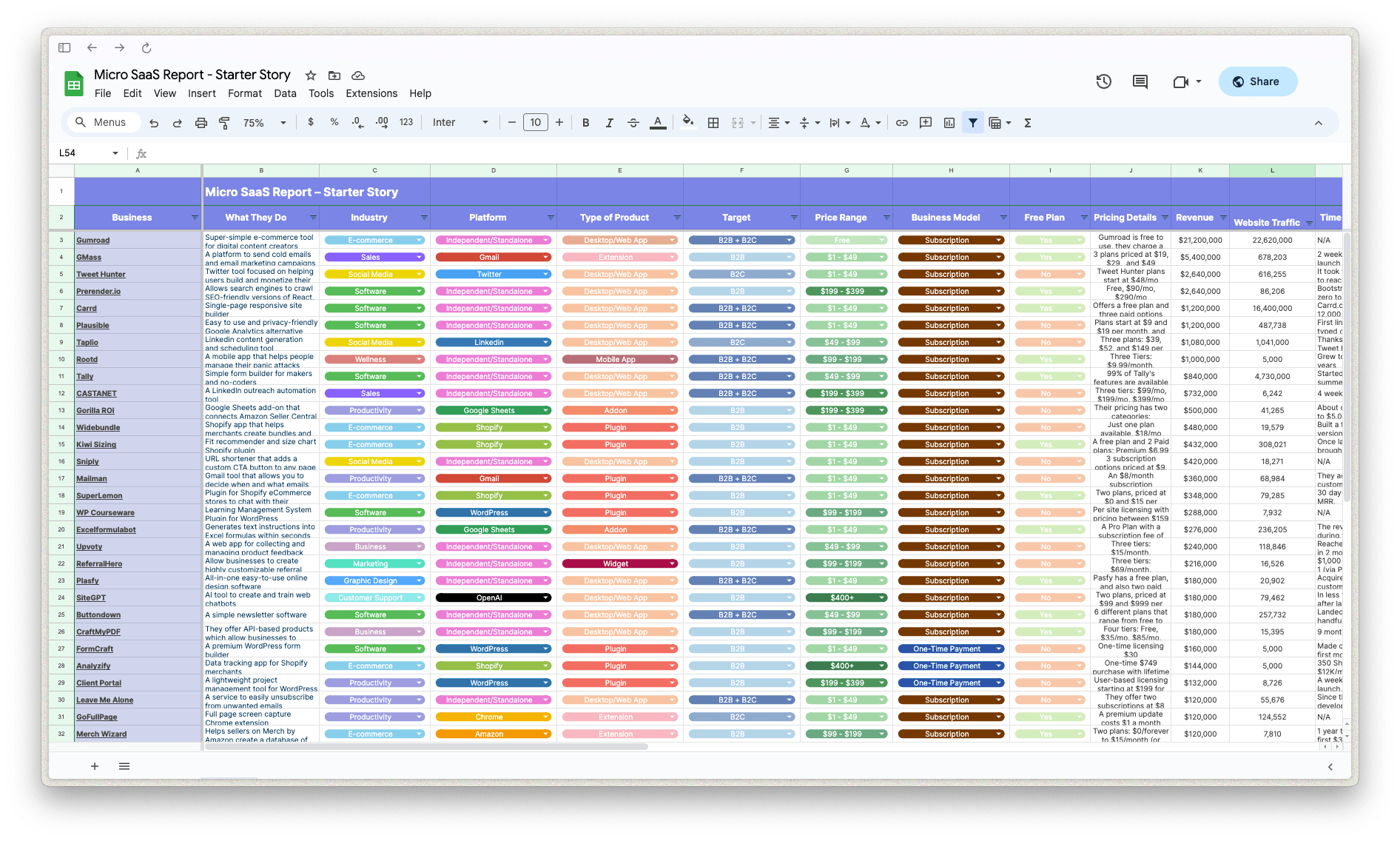

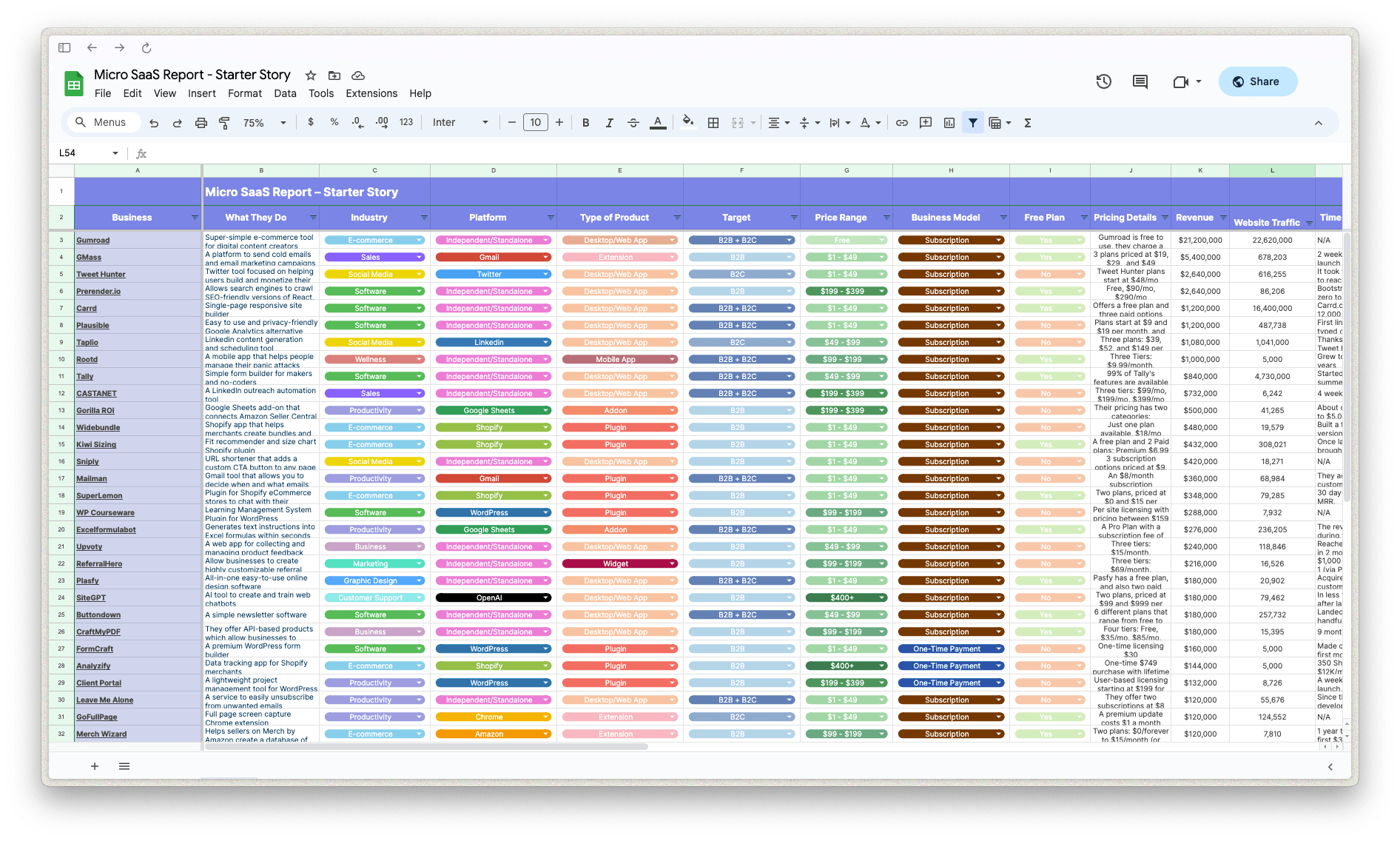

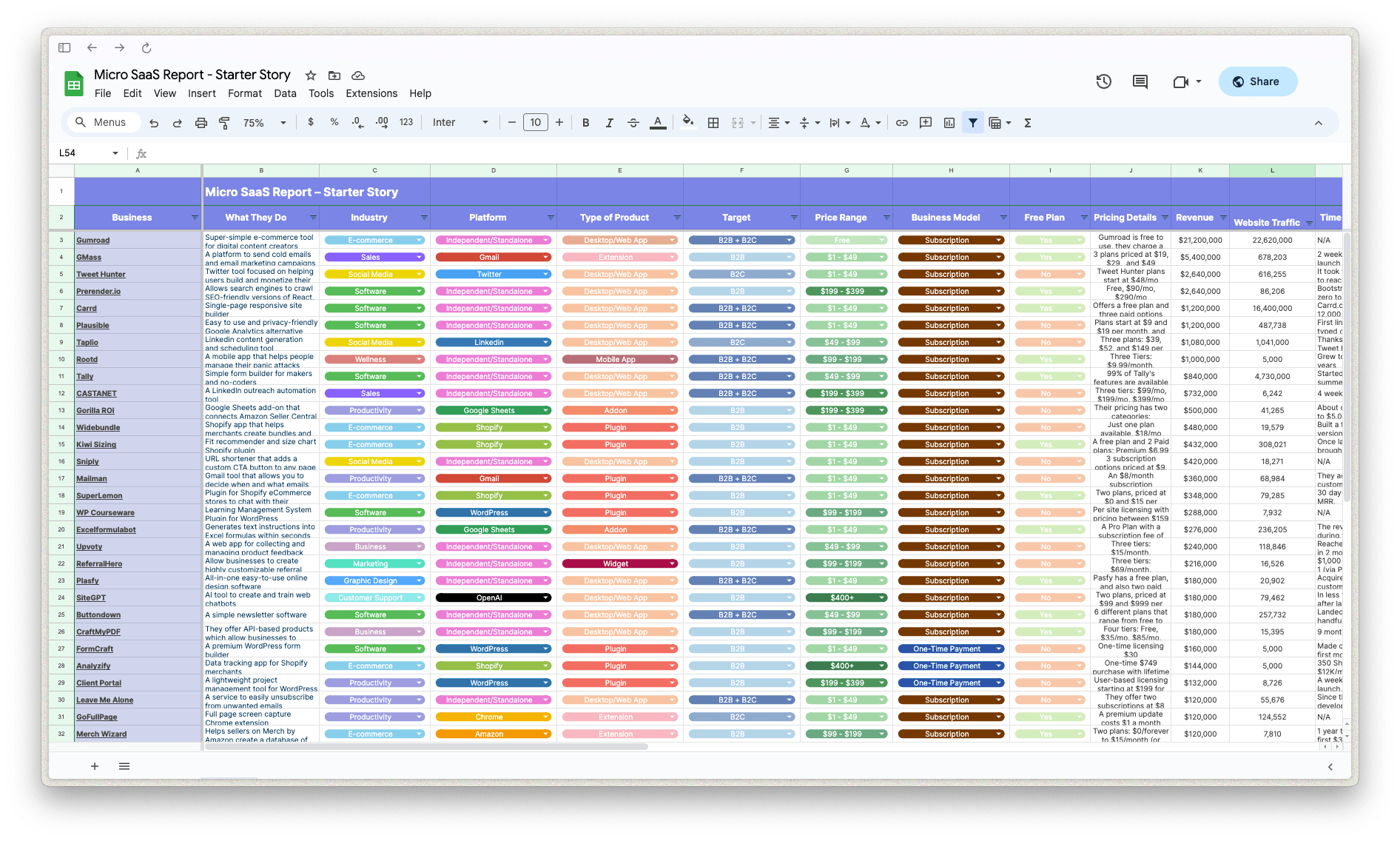

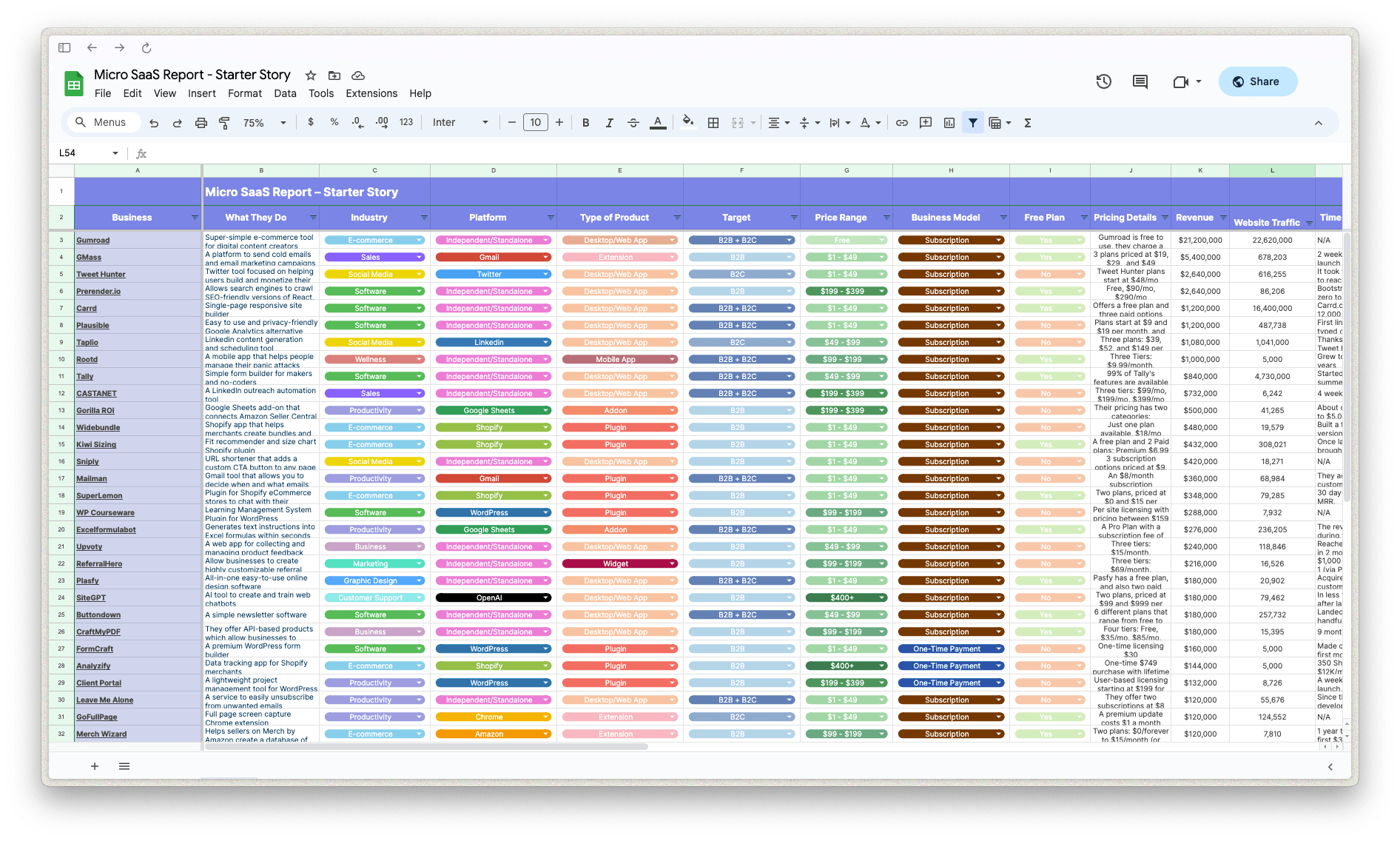

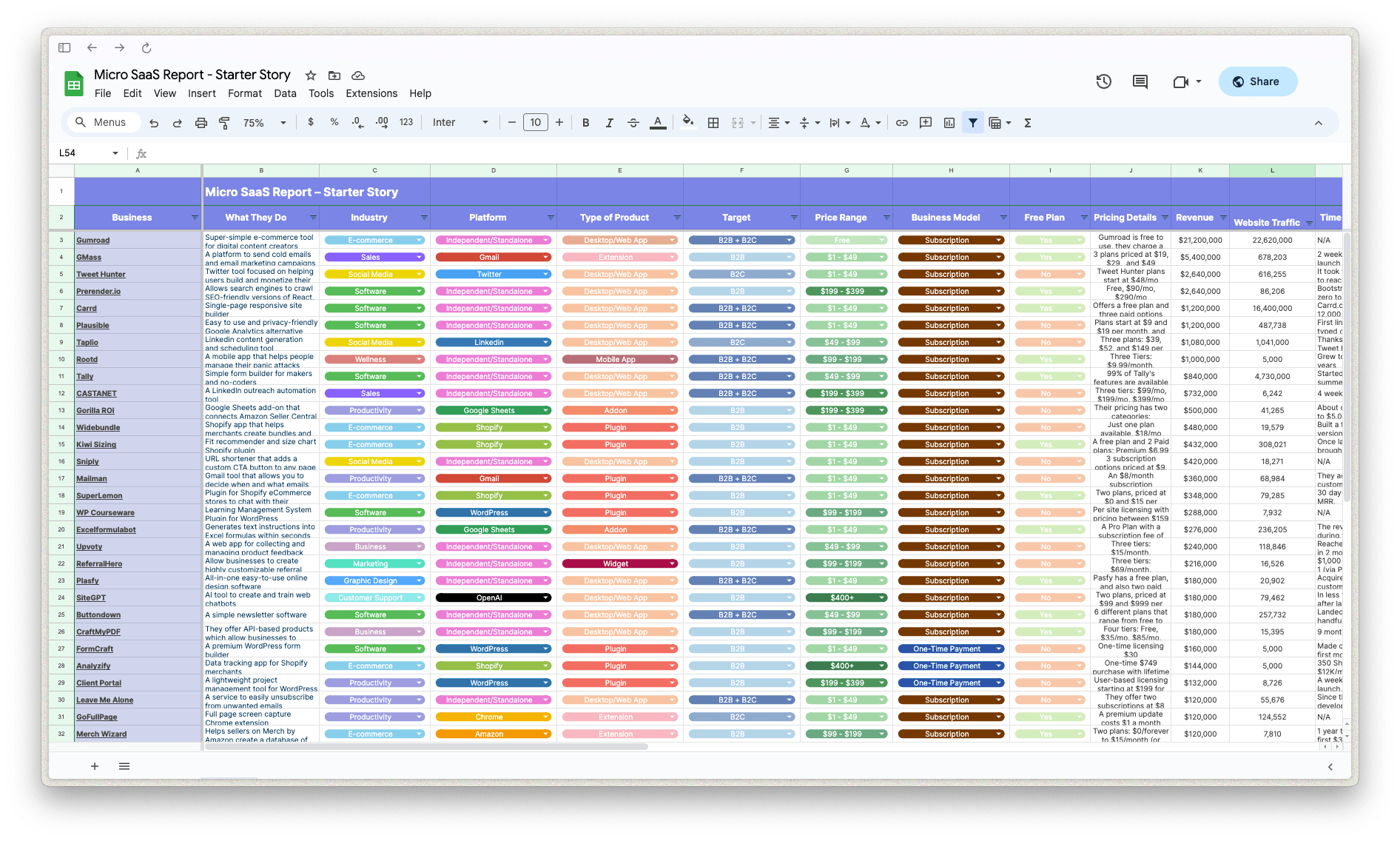

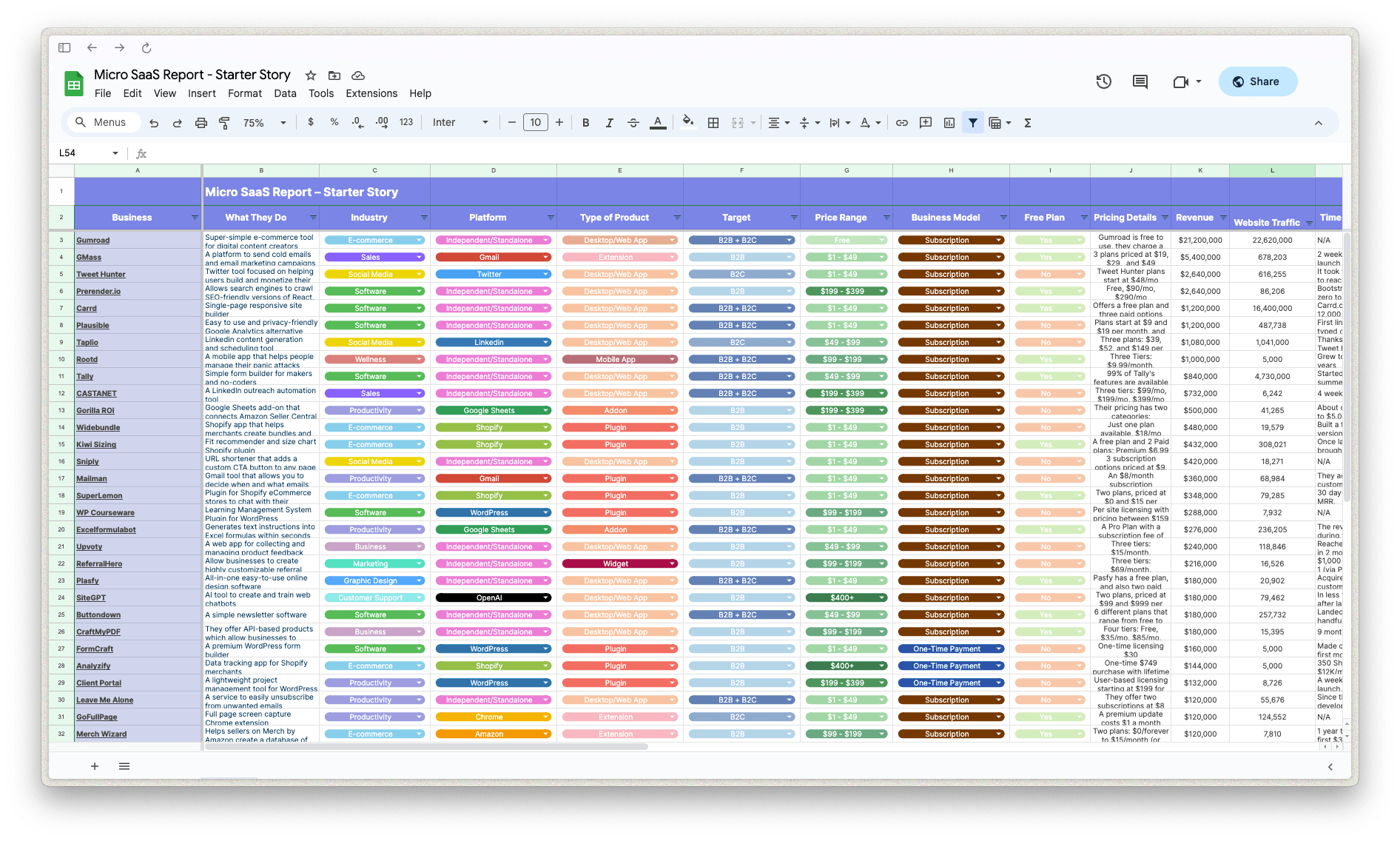

Download the report and join our email newsletter packed with business ideas and money-making opportunities, backed by real-life case studies.
